LINKS WE LIKE #31

Online Harassment and AI Content Moderation: Countering Threats to Freedom of Expression

The emergence and popularity of new social networks have opened space for a multitude of virtual interactions between people from diverse backgrounds. Consequently, the internet allows anyone to become a “communicator”, with access to a wide audience with whom to share their views. With the virtual environment becoming one of the most important centers of public debate, innovative policies and measures are needed that reduce online attacks and potential harm against vulnerable groups, while also guaranteeing the participation of minorities in the digital landscape. While human moderation has traditionally served that end, the sheer volume of content generated online every day has created the need for AI to act as an additional tool in the fight against harmful content.

Feminist studies understand online harassment (also referred to as online bullying) to be an expression of broader cultural patterns that relegate women to an inferior position in society. Additionally, recent literature discussing online harassment and hate speech draws a sharp distinction between harassment directed at a person for being part of a specific group and offense directed at a person for their views, with the consequences of the former being more profound. Consequently, groups that already suffer from discursive violence and inequalities are potentially more vulnerable to becoming targets of online harassment.

According to the literature, women are more likely than men to stop publishing content or engaging on social media after experiencing online attacks. These studies also show that online bullying directed at women is distinctive in that it is generally expressed through hyperbolic insults and sexualized mockery. In many cases, the attacker further characterizes the victim as unintelligent, hysterical and ugly, and makes threats and/or relays fantasies about violent sexual acts, generally framed as ways to “correct” the behavior of women or girls.

For women, the experiences of other women (and, consequently, their knowledge of the risk of being subjected to similar situations) can generate fear, even if they themselves have not gone through the same harassment. One of the most critical findings of the Data & Society survey is that online bullying, in addition to causing fear and other emotional symptoms, makes vulnerable groups more careful, if not reluctant, to express their views on the internet: four out of ten women say they have self-censored to prevent online harassment. Black and LGBTQIA individuals also tend to self-censor more than White and heterosexual people. For this reason, harassment can be a threat to the very ideals of equality and equitable democratic participation.

This edition of Links We Like explores the ways in which AI can be utilized to identify, measure and curtail online harassment, in order to create a safer and more equitable online experience for users of all backgrounds, thus helping to democratize freedom of expression in the digital landscape.

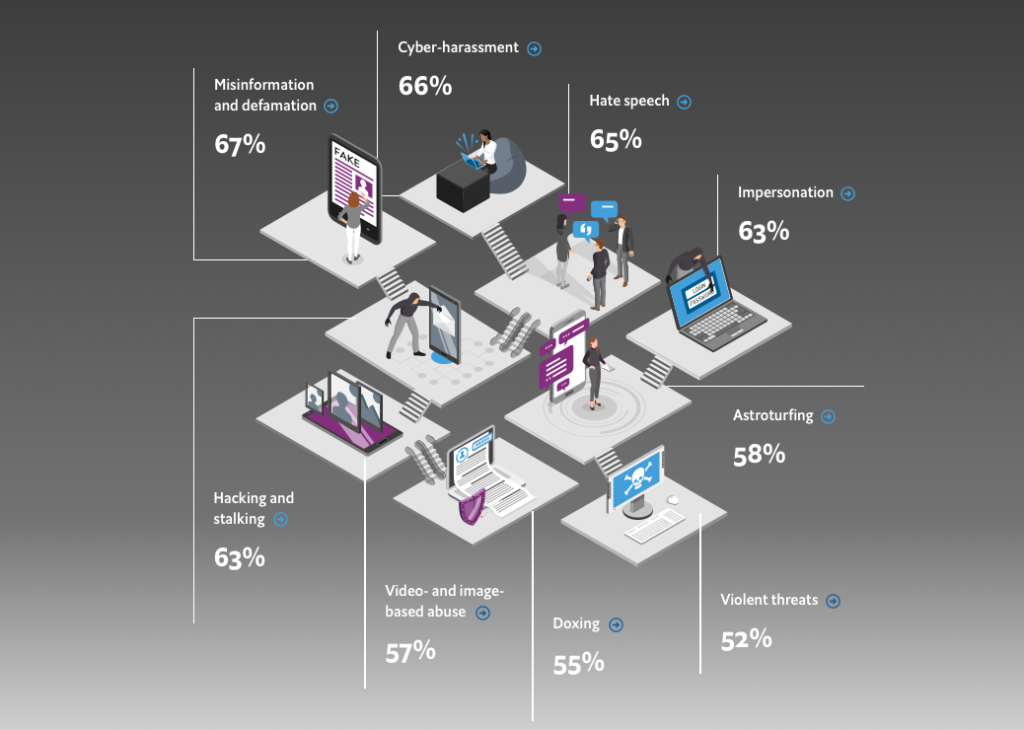

This article opens with the following quote: “The internet presents a double-edged sword for women”, which immediately illustrates the unfortunate realities women face online. The “double-edged sword” metaphor reflects the fact that the internet presents new dangers to women, while also giving them access to new spaces and communities in which to seek support and opportunities. Leveraging the power of data-driven storytelling, this EIU study covers the top 51 countries by number of online users, and addresses issues such as regional differences in the prevalence of online violence (with the Middle East leading), lack of government support, frequency of underreporting, and the overall impacts on, and actions taken by, those experiencing abuse online. One of the most interesting findings is the list of nine threat tactics that predominate in the digital landscape: misinformation and defamation (67%), cyber-harassment (66%), hate speech (65%), impersonation (63%), hacking and stalking (63%), astroturfing (58%), video- and image-based abuse (57%), doxing (55%), and violent threats (52%). To learn more about each tactic, click on the link above.

In this blog post, Senior Policy Advisor Sebnem Kenis sheds light on the problems with AI-based content moderation and techno-solutionism. While it is easy for legislators, governments and even companies to put their faith in innovations and “content-policing” conducted by tech companies, Kenis argues that most online harms, such as hate speech, cyber harassment and even violent extremism, are deeply rooted in homo/transphobia, patriarchy, racism, ultranationalism and poverty. According to Kenis, AI-powered solutions have proven ineffective in preventing online harm and related human rights violations. The author further explains that when algorithms fail to detect certain harmful or violent content, they can actually contribute to its spread. For example, in 2018 UN investigators accused Facebook of playing a role in inciting genocide in Myanmar. Likewise, freedom of expression and access to information are infringed when legitimate political expression is removed by automatic filters. Kenis brings up cases of leftist and LGBTIQ+ content, as well as examples from Colombia, India and Palestine, to emphasize the point. While AI-powered solutions can be useful tools for content moderation, it is unwise to pin our hopes on them alone to address deeper and more complex societal issues.

This article makes the case for the use of AI, both before content is shared and after it has been published, to prevent inappropriate, hateful or false information from going viral. Although the article recognizes the great challenge this implies, it also offers options to improve on human post hoc moderation. One option is to focus on content before it is published, by implementing systems that elicit self-moderation from users of social media and online platforms before they post questionable content. This option could also be paired with AI technology. For example, AI could be used to examine platforms’ archives of previously removed content, and trained (i.e. using a machine learning algorithm) to assess the likelihood that new content may violate community standards. If a post then has more than a 75 percent likelihood of violating platform policies, the user would be asked to re-evaluate their decision to post. If the content is still published, it would be flagged for inspection by a human moderator. By outlining these moderation alternatives, the authors seek to move the conversation forward and improve the existing post hoc moderation process by making it much more effective and participatory.
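The pre-publication flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `moderate_before_posting`, `Decision`, `violation_probability` and `user_confirms` are hypothetical, and `toy_model` is a crude keyword stand-in for the classifier the article imagines being trained on previously removed content. Only the 75 percent threshold and the "nudge first, then flag for a human" order come from the article.

```python
from dataclasses import dataclass
from typing import Callable

REVIEW_THRESHOLD = 0.75  # the 75 percent likelihood mentioned in the article


@dataclass
class Decision:
    published: bool
    prompted_user: bool
    flagged_for_human_review: bool


def moderate_before_posting(
    text: str,
    violation_probability: Callable[[str], float],
    user_confirms: Callable[[str], bool],
    threshold: float = REVIEW_THRESHOLD,
) -> Decision:
    """Nudge the author first; escalate to a human only if they post anyway."""
    score = violation_probability(text)
    if score <= threshold:
        # Low estimated risk: publish without interrupting the user.
        return Decision(published=True, prompted_user=False,
                        flagged_for_human_review=False)
    # High estimated risk: ask the user to reconsider before posting.
    if user_confirms(text):
        # The user insisted, so the post goes live but is queued for a human.
        return Decision(published=True, prompted_user=True,
                        flagged_for_human_review=True)
    # The user withdrew the post after the prompt.
    return Decision(published=False, prompted_user=True,
                    flagged_for_human_review=False)


def toy_model(text: str) -> float:
    """Hypothetical stand-in scorer; NOT a trained classifier."""
    blocked_terms = {"idiot", "stupid"}
    words = text.lower().split()
    hits = sum(word.strip(".,!?") in blocked_terms for word in words)
    return min(1.0, hits / max(len(words), 1) * 4)


if __name__ == "__main__":
    # Simulate a user who posts anyway after being prompted.
    print(moderate_before_posting("you are an idiot", toy_model, lambda _: True))
```

In a real system the scorer would be a model trained on a platform's moderation history, and the confirmation step would be a dialog shown to the user; passing both in as callables keeps the decision logic separate from either choice.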

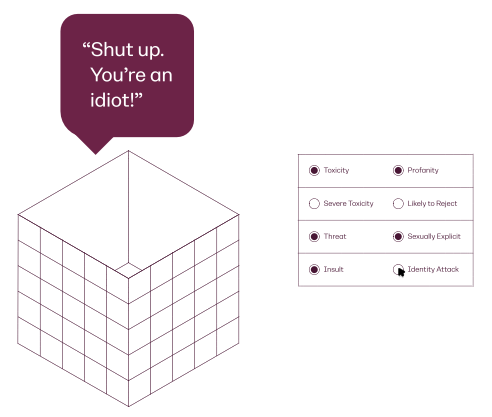

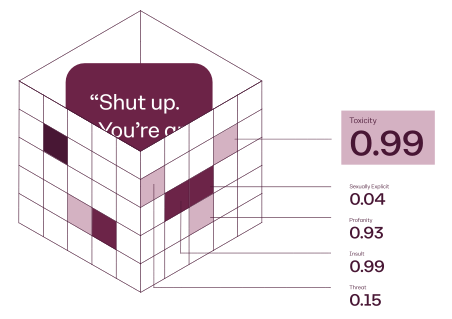

The “Perspective API” project emerged from a collaborative research effort between Google’s Counter Abuse Technology team and the Jigsaw Initiative. Perspective is a free API that uses machine learning models to identify “toxic” comments in online conversations. The model uses a scoring system to estimate the perceived impact a text can have on such conversations. Scores are reported for several attributes, including toxicity, profanity, threats, explicit sexuality, identity attacks, insults and severe toxicity. The project’s goal is to help human moderators review comments faster and more efficiently, while also helping readers filter out language that is harmful or toxic. The tool can be integrated into different platforms and spaces, such as comment sections or forums, and is currently being used by Reddit, The Wall Street Journal, The New York Times, Le Monde and other important publications across the world.
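To make the idea of attribute scores concrete, here is a minimal Python sketch of how a moderation team might order its review queue once comments have been scored. It does not call Perspective itself: the score dictionaries are made-up values, the attribute keys simply mirror those listed above, scores are assumed to lie between 0 and 1, and `review_queue` and its 0.8 threshold are hypothetical choices for illustration.

```python
from typing import Dict, List, Tuple

Scores = Dict[str, float]  # attribute name -> score, assumed to lie in [0, 1]


def review_queue(
    comments: Dict[str, Scores], threshold: float = 0.8
) -> List[Tuple[str, str, float]]:
    """Return (comment_id, top_attribute, score) entries, highest score first.

    Only comments whose highest attribute score reaches the threshold are
    queued, so moderators see the likeliest problems before anything else.
    """
    queue = []
    for comment_id, scores in comments.items():
        if not scores:
            continue  # nothing was scored for this comment
        top_attribute, top_score = max(scores.items(), key=lambda item: item[1])
        if top_score >= threshold:
            queue.append((comment_id, top_attribute, top_score))
    return sorted(queue, key=lambda entry: entry[2], reverse=True)


# Made-up scores for three comments (illustrative values only).
example_scores = {
    "c1": {"toxicity": 0.12, "insult": 0.05, "threat": 0.01},
    "c2": {"toxicity": 0.91, "insult": 0.88, "threat": 0.10},
    "c3": {"toxicity": 0.60, "insult": 0.30, "threat": 0.95},
}

if __name__ == "__main__":
    for entry in review_queue(example_scores):
        print(entry)
```

Sorting by the single highest attribute is one simple design choice; a platform could just as well weight threats more heavily than profanity, or keep a separate threshold per attribute.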

YouTube’s Chief Product Officer, Neal Mohan, stated last year that a lack of human oversight over content removal led automated moderation systems to take down 11 million videos that did not violate any community guidelines. This article examines AI’s role in content moderation, delving into its benefits and limitations. It first explains how AI uses content-based moderation (in the form of natural language processing) to analyse text and images and determine whether they contain hate speech, harassment, and/or fake news. Though these methods are not foolproof, as YouTube’s example demonstrates, the article argues that they are much more efficient and effective than relying solely on human content moderators, both in the amount of content that can be reviewed and in reducing the mental health trauma moderators face, including post-traumatic stress disorder. However, the author notes that because technology has not advanced to the point where it can perfectly assess whether a piece of content is harmful, the best alternative remains a hybrid human-AI moderation system.

Further Afield

Online Harassment

- Online Harassment Isn’t Growing—But It’s Getting More Severe

- Online Harassment 2017

- Defining ‘Online Abuse’: A Glossary of Terms

- Techdirt Podcast Episode 268: A New Approach To Fighting Online Harassment

- Harvard Business Review. You’re Not Powerless in the Face of Online Harassment

- Online Harassment and Content Moderation: The Case of Blocklists

Content moderation strategies and algorithms

- The impact of algorithms for online content filtering or moderation

- Algorithmic misogynoir in content moderation practice

- Platforms Should Use Algorithms to Help Users Help Themselves

- Online Harassment and Content Moderation: The Case of Blocklists

- OHCHR. Moderating online content: fighting harm or silencing dissent?

- Freedom of Expression and Its Slow Demise: The Case of Online Hate Speech (and Its Moderation/Regulation)

- Shadows to light: How content moderation protects users from cyberbullying

Social media content moderation

- Double Standards in Social Media Content Moderation

- Moderating Hate Speech and Harassment Online: Are Internet Platforms Responsible for Managing Harmful Content?

- Social Media Platforms Claim Moderation Will Reduce Harassment, Disinformation and Conspiracies. It Won’t.

- Tools and strategies for online moderators to address abuse on social media

- Hate Speech on Social Media: Content Moderation in Context

AI and content moderation

- AI and Content Moderation – The Ethics of Digitalisation

- The Role of AI in Content Moderation

- Leveraging AI and Machine Learning for Content Moderation

- How AI Is Learning to Identify Toxic Online Content

- This New Way to Train AI Could Curb Online Harassment

- Predicting Cyberbullying on Social Media in the Big Data Era Using Machine Learning Algorithms: Review of Literature and Open Challenges

- Can data analytics and machine learning prevent cyberbullying?