DISCUSSION PIECE

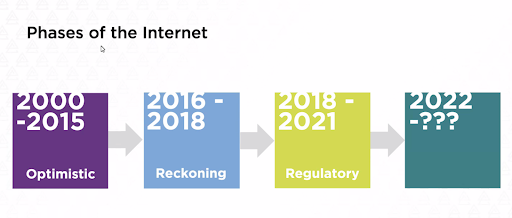

In the last ten years, the world has watched the power and influence of tech platforms grow, largely unregulated by law, while also becoming increasingly interdependent with media and politics. Along with the recent and rapid digitalization of news production, distribution, and consumption, these structural changes have created a media environment in which online disinformation thrives. Subsequently, concerns over its detrimental impact on democracy have begun to dominate public, academic and regulatory discourse. The need for agile regulation to address emerging and disruptive technologies is clearly present, but is the fear surrounding disinformation proportionate to the actual threat? How should policymakers and regulators approach the online disinformation phenomenon? And lastly, what are the main challenges in addressing and countering online disinformation?

This discussion piece will explore how access to social media data is key to answering these questions. Tech conglomerates have had, up until recently, uninterrupted control over the data gathered on their platforms. As a result, risks like disinformation have been difficult to identify and combat, while platforms retain information and knowledge that could aid researchers in evidence-based policy-making. It is becoming increasingly evident that effective platform governance relies on the ability of independent researchers to access online platforms’ data. Expanding regulation to the digital sphere and to tech platforms could mean that Big Data can be utilized to combat disinformation, and could encourage platforms to address the controversial subject of content moderation in a much more transparent way. When data is shared and used safely, ethically, and systematically at scale, it represents a massive untapped resource for researchers to understand trends, develop policies, monitor the spread of mis/disinformation, and guide actions at the collective level. Enhancing researchers’ access to platform data will allow them to better identify organized disinformation campaigns, to understand users’ engagement with disinformation and to identify how platforms enable, facilitate, or amplify disinformation (Dutkiewicz 2021).

What is Disinformation?

For this piece, the term disinformation will be used synonymously with the term misinformation, despite the slight variance in their scope. Both of these terms refer to shared information that, in its untruthful nature, causes harm. Disinformation is defined by UNESCO as “information that is false and deliberately created to harm a person, social group, organization, or country”, whereas misinformation is not intended to cause harm. When examining shared information, there are three important elements that contribute to our understanding of disinformation:

- Types of content being created and shared (e.g., a joke, parody, false context, and fabricated content)

- The creator’s motivation (e.g., political or financial gain, reputation)

- How content is being disseminated (e.g., headline news, social media tweets or statuses, and data leaks) (Ahmad et al., 2021, p. 106)

Another term of relevance is ‘Coordinated Inauthentic Behavior’ (CIB), defined by Meta as ‘coordinated efforts to manipulate public debate for a strategic goal, in which fake accounts are central to the operation. In each case, people coordinate with one another and use fake accounts to mislead others about who they are and what they are doing’ (‘Removing Coordinated Inauthentic Behavior From China and Russia’ 2022).

‘Threat to Democracy’: Actual Threat or Moral Panic?

The general discourse surrounding disinformation conveys deep suspicion and a dystopian fear of its threat to democracy, but what do we really mean when we say it threatens democracy? Disinformation possesses the ability to “upset” political discourse in the public arena, or what is commonly referred to as the ‘digital town square’. What this means is that, with the fourth industrial revolution, democratic and civic discourse has moved largely online, and as a consequence, a platform is being extended to those who might hold extremist views that threaten democratic ideals and institutions. This threat is best understood through its tendency to polarize public debate, giving way to ideological extremes. In addition to polarization, the increasingly complex information environment becomes susceptible to influence operations such as Coordinated Inauthentic Behavior carried out on behalf of domestic non-government, foreign or government actors. These campaigns are intended to derail public debate in a saturated information environment, where individuals may be unable to discern what is true and what is not. They can affect elections and public health, and weaken trust in democratic institutions.

Researchers Andreas Jungherr and Ralph Schroeder argue that more attention should be given to the benefits of opening up the public arena – “a more noisy, impolite, and unrulier arena of political discourse might turn out to be a small price to pay for not increasing control by already powerful institutions” (Jungherr and Schroeder, 2021, p. 5). They make the argument that Facebook, Twitter, YouTube, and other social media platforms have increasingly acted as a counterbalance to the influence of traditional media institutions and political organizations in maintaining the information environment within democracies. While these new forms of information exchange do not employ the fact-checking systems utilized by traditional journalistic outlets, they could also be interpreted as expanding plurality and the channels through which alternative discourse can thrive in the public arena.

While there is truth in this perspective, it does not acknowledge the indisputable need for greater platform data transparency. We can collectively embrace plurality while also striving for mechanisms that grant researchers access to data for the continued development of regulatory frameworks surrounding disinformation.

Reimagining Data Access

There has been a spotlight on the role of content moderation in addressing disinformation, and while this is important, regulators are stressing the need to focus more generally on the regulation of platforms as disruptive industries. As Jungherr and Schroeder put it, “addressing disinformation primarily from an information quality perspective…resembles the doctor only treating a patient’s symptoms while missing the cause of the disease” (Jungherr and Schroeder, 2021, p. 3). So how can policymakers develop policies around platforms and disinformation? The primary barrier to regulation is access to data.

Platforms have a massive reserve of information and knowledge, yet maintain control over access to data under the guise of privacy protection. Their unwillingness to share this data is more likely due to “privatization of data ecosystems, the lack of incentives for platforms in revealing what kind of users’ data they have and how they use it, corporate secrecy on platforms’ algorithmic practices and data protection concerns” (Dutkiewicz, 2021). Of course, data sharing raises major concerns over privacy, but it is possible for access to be granted in a privacy-preserving way, and for data to be used as a democratic tool that empowers citizens. Platforms and policymakers are still navigating this uncharted territory, and as a result we are seeing a push for legislative action on platform transparency, as well as a growing body of research and open-source tools to aid understanding in this area.

The adoption of the Digital Services Act (DSA) in July 2022 by the European Parliament marks the dawn of an era of increased digital data access, and is a step forward in legislation that aims to keep platforms transparent and accountable through independent oversight. One of the biggest achievements of the DSA is that it outlines a blueprint for allowing legally mandated access to data for research purposes (Dawning Digital Data Access via New EU Law 2022). Rita Jonušaitė, Advocacy Coordinator for EU DisinfoLab, states that it brings unprecedented levels of transparency in terms of all the data that will be available, including data on how platforms approach content moderation and the preventative measures they have in place. However, this framework needs further development when it comes to the access researchers will have to platform data on how platforms tackle systemic risks like disinformation (Jonušaitė 2022).

Currently, access to platform data is governed by contractual agreements, platforms’ own terms of service and Application Programming Interfaces (APIs). Article 31 of the DSA provides a specific provision that limits access to vetted researchers, meaning that the scope of access to data is ultimately narrow, granting access mainly to university-affiliated researchers. This provision fails to recognize the growing disinformation research community, consisting of journalists, fact-checkers, digital forensics experts, and open-source investigators. For example, there is increasing research on the problems of the social Internet by investigative journalist networks and organizations like the Digital Forensic Research Lab by the Atlantic Council, the Integrity Institute, the Global Disinformation Index or the Content Authenticity Initiative. Meanwhile, Democracy Reporting International has developed a threat registry, Disinfo Radar, which is an interactive online resource exploring new trends and tools in the disinformation environment. These organizations work to understand the systemic causes of phenomena such as disinformation, and combine expertise to theorize on how to mitigate them. As such, their efforts rely on continued data access, and illustrate how data can be put to good use in building stronger democracies.

Despite this achievement in Europe, legislative action in the US has been delayed. There is hope that the DSA will, through the ‘Brussels effect’, serve as a framework that can be adopted by other countries and regions. As a landmark framework, the DSA is a move towards greater data transparency, but the practical application of the DSA has yet to be seen. There are still many questions surrounding the way in which content is delivered, moderated and pushed which need to be answered, and through the use of data, there are a number of opportunities for policymaking surrounding disinformation to develop.

Below, I detail some key ways in which data can be used in addressing disinformation online.

- Platform Design

With access to more social media data, socio-technical design of software to monitor and address disinformative content can be further developed. By enabling researchers, engineers, and designers to better understand these systems, they can produce work in open-source formats, which in turn can allow others to come up with new and innovative approaches to combating disinformation. Computer scientists and engineers should be considered as central policymakers / decision makers on the Internet, “as their technical work, whether intended as such or not, creates the rules by which the internet works.” (Kyza et al., 2020, p. 6).

- Open Source Research and Tools

With simple training, anyone can analyze open-source data, making research transparent and accessible. The data that can be extracted or ‘scraped’ from social media using APIs can be used to aid investigative journalists and to monitor discourse surrounding elections, conflict and democratic transitions. A number of tools have already been developed to extract and analyze social media data and to detect disinformation, such as CrowdTangle, a tool owned by Meta itself, which can be used to gather public social media data for research purposes, or TruthNest, an app that provides real-time Twitter analytics and evaluates the credibility of content on the platform. There are, of course, limits to the type of data that can be collected by these tools, but they represent a valuable guidepost for further research and development.
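As a rough illustration of the kind of analysis that becomes possible once public post data is available, the Python sketch below flags messages that several distinct accounts post, in near-identical wording, within a short time window. Everything in it is an assumption made for illustration: the sample posts, the field names (`account`, `text`, `time`), the function names and the thresholds are hypothetical and are not taken from CrowdTangle, TruthNest or any platform API. Repeated wording alone is not evidence of a coordinated campaign; it is only a starting point for closer investigation.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical sample of public posts. In practice these would come from a
# data export or API response; the field names are assumptions for this sketch.
POSTS = [
    {"account": "a1", "text": "Polling stations CLOSED early!  Share now", "time": "2022-09-27T10:00:00"},
    {"account": "a2", "text": "polling stations closed early! share now", "time": "2022-09-27T10:02:00"},
    {"account": "a3", "text": "Polling stations closed early! Share now ", "time": "2022-09-27T10:04:00"},
    {"account": "a4", "text": "Lovely weather in Berlin today", "time": "2022-09-27T10:05:00"},
    {"account": "a1", "text": "Lovely weather in Berlin today", "time": "2022-09-28T18:00:00"},
]


def normalize(text):
    """Lower-case the text and collapse whitespace so near-identical copies match."""
    return " ".join(text.lower().split())


def flag_repeated_messages(posts, min_accounts=3, window=timedelta(minutes=10)):
    """Map each normalized text to the accounts that posted it, keeping only
    texts posted by at least `min_accounts` distinct accounts within `window`."""
    by_text = defaultdict(list)
    for post in posts:
        when = datetime.fromisoformat(post["time"])
        by_text[normalize(post["text"])].append((when, post["account"]))

    flagged = {}
    for text, entries in by_text.items():
        entries.sort()  # order by timestamp
        for start, _ in entries:
            # Distinct accounts posting this text within the window from `start`.
            accounts = {acc for t, acc in entries if start <= t <= start + window}
            if len(accounts) >= min_accounts:
                flagged[text] = sorted(accounts)
                break
    return flagged


if __name__ == "__main__":
    for text, accounts in flag_repeated_messages(POSTS).items():
        print(f"{len(accounts)} accounts posted: {text!r}")
```

A heuristic this simple will miss paraphrased content and will also flag harmless behavior such as many users sharing the same headline, which is why fuller access to platform data, and human review of anything flagged, matter for this kind of research.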

- Stakeholder Theory and Collaborative Policymaking

Involving stakeholders in policymaking surrounding disinformation is key to developing policies that work. Stakeholder theory is a co-creation process which integrates the views of various relevant actors and not only of “educated experts” (Kyza et al., 2020, p. 7). This form of collaboration, supported by data extraction and analysis, has the potential to reveal the varying and interdependent interests at stake in policy that impacts disinformation and overall content moderation. Harnessing the power of data can change the meaning of democratic participation by allowing individuals to be involved in decision-making in a manner that has never before occurred.

Conclusion

The new DSA marks the beginning of legally mandated access to data, allowing researchers to better understand the digital town square, but at this stage it still requires further research and development. Through advocacy and policy aimed at greater access to data that prioritizes human safety and privacy while maintaining freedom of speech, researchers can better comprehend information within the online ecosystem, and policymakers can develop more effective policy proposals to address digital disinformation operations. A push for increased platform data transparency is, therefore, a push for evidence-based solutions to problems that pose a threat to our democracies in the digital age.

About the author: Jasmine Erkan is a Research Assistant with Data-Pop Alliance’s Just Digital Transformations Program. She currently resides in Germany.

References

- Ahmad, Norita, Nash Milic, and Mohammed Ibahrine. 2021. ‘Data and Disinformation’. Computer 54 (07): 105–10. https://doi.org/10.1109/MC.2021.3074261.

- ‘Dawning Digital Data Access via New EU Law’. 2022. Just Security. 20 October 2022. https://www.justsecurity.org/83622/dawning-digital-data-access-via-new-eu-law/.

- Dutkiewicz, Lidia. 2021. ‘From the DSA to Media Data Space: The Possible Solutions for the Access to Platforms’ Data to Tackle Disinformation’. European Law Blog (blog). 19 October 2021. https://europeanlawblog.eu/2021/10/19/from-the-dsa-to-media-data-space-the-possible-solutions-for-the-access-to-platforms-data-to-tackle-disinformation/.

- Jonušaitė, Rita. 2022. ‘The Big “R”: Regulation and Emerging Technologies in a Global Disinformation Ecosystem’. Berlin, 6 December 2022. https://democracy-reporting.org/en/office/global/events/disinfocon-the-conference-that-brings-together-international-expert-to-discuss-upcoming-disinformation-challenges.

- Jungherr, Andreas, and Ralph Schroeder. 2021. ‘Disinformation and the Structural Transformations of the Public Arena: Addressing the Actual Challenges to Democracy’. Social Media + Society 7 (1). https://doi.org/10.1177/2056305121988928.

- Kapp, Julie M., Brian Hensel, and Kyle T. Schnoring. 2015. ‘Is Twitter a Forum for Disseminating Research to Health Policy Makers?’ Annals of Epidemiology 25 (12): 883–87. https://doi.org/10.1016/j.annepidem.2015.09.002.

- Kyza, Eleni A., Christiana Varda, Dionysis Panos, Melina Karageorgiou, Nadejda Komendantova, Serena Coppolino Perfumi, Syed Iftikhar Husain Shah, and Akram Sadat Hosseini. 2020. ‘Combating Misinformation Online: Re-Imagining Social Media for Policy-Making’. Internet Policy Review 9 (4). https://policyreview.info/articles/analysis/combating-misinformation-online-re-imagining-social-media-policy-making.

- Nimmo, Ben, and Mike Torrey. 2022. ‘Taking down Coordinated Inauthentic Behavior from Russia and China’. Meta. https://about.fb.com/news/2022/09/removing-coordinated-inauthentic-behavior-from-china-and-russia/.

- ‘Removing Coordinated Inauthentic Behavior From China and Russia’. 2022. Meta (blog). 27 September 2022. https://about.fb.com/news/2022/09/removing-coordinated-inauthentic-behavior-from-china-and-russia/.